Similar Posts

Microsoft Unveils Powerful Phi-4 Model as Open Source on Hugging Face: A Game Changer in AI Technology!

Recent research on Microsoft's Phi-4 model suggests that smaller, well-designed AI models can match or surpass the performance of larger ones, challenging the belief that bigger is always better in AI. Phi-4's advantages include lower computational requirements, lower cost, and faster training. Comparisons with larger models show that it reaches similar accuracy, processes inputs faster, and adapts better to a variety of datasets. These findings could reshape AI development by prioritizing model design over size, leading to more efficient and sustainable AI systems.

Alienware Unveils Powerful Aurora Laptops Perfect for Mid-Range Gamers

Dell's Alienware division is set to launch the highly anticipated Aurora line of gaming laptops, its first new line in nearly 20 years. Aimed at serious gamers, these laptops promise advanced specifications, sleek designs, and improved cooling. Key features include high-performance hardware, customizable RGB lighting, premium audio, and long battery life. The release date has not yet been announced. The Aurora series is expected to be an exciting development for gaming enthusiasts.

Unlocking the Secrets of Transformers: The Powerhouse Behind AI Model Innovation

Transformers have revolutionized machine learning and artificial intelligence, particularly the development of Large Language Models (LLMs). Their core mechanism, self-attention, lets the model process a whole sequence in parallel and weigh each word's significance according to its context. The original architecture pairs an encoder with a decoder and wraps each sublayer in a residual connection followed by layer normalization; many later models keep only one half, with GPT-3 using a decoder-only stack and BERT an encoder-only stack. Transformers perform well across many applications, including Natural Language Processing (NLP), image processing through Vision Transformers, and reinforcement learning. They underpin LLMs such as BERT, which excels at understanding text, and GPT-3, which excels at generating it. As research progresses, transformers are likely to drive further innovation in AI.
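The self-attention step described above is usually implemented as scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as introduced in "Attention Is All You Need". The following is a minimal single-head sketch in pure Python, assuming toy hand-picked projection matrices; the names `self_attention`, `w_q`, `w_k`, and `w_v` are illustrative only. Real models use learned weights, multiple heads, masking, and tensor libraries.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an n x m matrix by an m x p matrix."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention (hypothetical helper)."""
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token offers
    v = matmul(x, w_v)  # values: the content that gets mixed
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # token-to-token relevance
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v), weights


# Toy input: 3 tokens with 2-dimensional embeddings, identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each output row is a weighted average of the value vectors, so every token's new representation mixes in information from the tokens it attends to most strongly. Dividing by √d_k keeps the scores from growing with the embedding size, which would otherwise push the softmax toward near one-hot weights.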

Future of Enterprise AI: Insights from Top Leaders at VB Transform 2025

At VB Transform 2025, AI executives convened to set an agenda focused on practical uses of artificial intelligence. Highlights included actionable lessons for real-world deployments, networking with industry leaders, and discussions of future trends. Topics included ethical AI, improving business efficiency with AI, and AI innovation in healthcare. The event encouraged collaboration and idea exchange among participants and served as a platform for sharing strategies to drive innovation in AI.

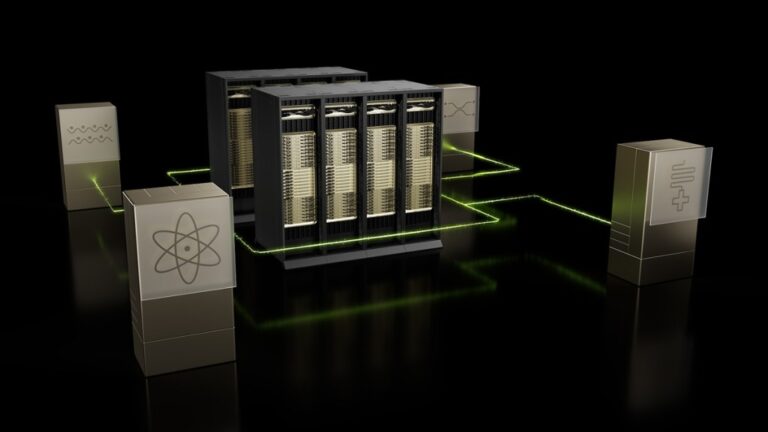

Nvidia Unveils Groundbreaking Accelerated Quantum Computing Research Center

Nvidia is establishing a new research center in Boston to advance quantum computing technologies. This initiative aims to position Nvidia as a leader in the field by focusing on groundbreaking research, fostering collaboration with academic institutions and industry experts, and tapping into the region's talent pool. The center's objectives include developing innovative solutions that enhance computational capabilities, potentially transforming sectors like healthcare, finance, and artificial intelligence. Nvidia's investment in quantum computing not only strengthens its portfolio but also contributes to the future of computing technology.

Transforming AI into Industry: Overcoming Challenges to Harness the Power of AI Factories

Like traditional factories, AI infrastructure must be managed carefully to achieve optimal performance and scalability, and it faces several significant challenges. The key issues are power, since AI systems consume substantial energy; scalability, since systems must adapt to growing workloads; and reliability, which is crucial in critical sectors such as healthcare and finance. Organizations should invest in energy-efficient solutions to reduce costs and environmental impact. Cloud-based resources can improve scalability, while robust monitoring can improve reliability by catching potential issues before they cause failures. Tackling these challenges effectively is vital to realizing the full potential of AI technologies.