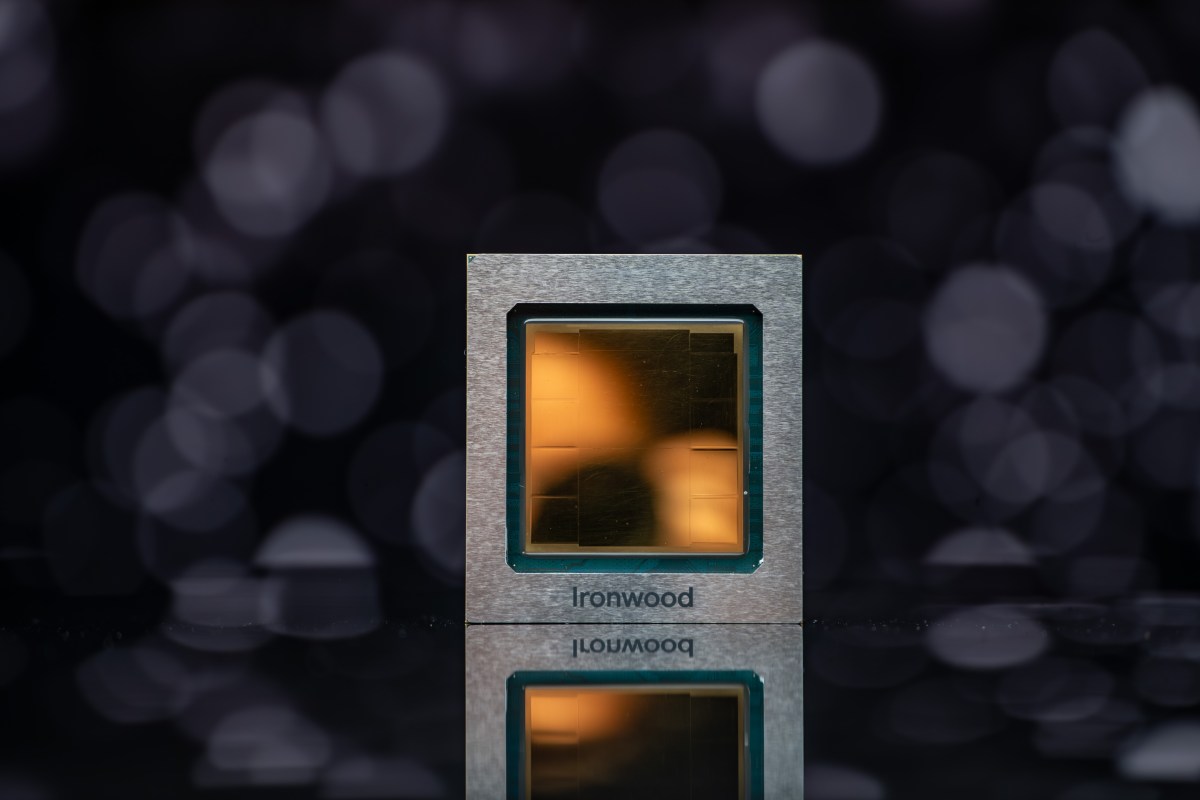

Discover Ironwood: Google’s Cutting-Edge AI Accelerator Chip Revolutionizing Technology

At the recent Cloud Next conference, Google introduced its newest AI accelerator chip, the Ironwood TPU. This seventh-generation TPU is optimized specifically for inference, that is, running trained AI models to produce outputs.

Introducing Ironwood: Google’s Latest TPU Chip

The Ironwood TPU is set to launch later this year for Google Cloud customers and will be available in two configurations: a 256-chip cluster and a 9,216-chip cluster.

Key Features of the Ironwood TPU

- Peak Performance: Each Ironwood chip can deliver up to 4,614 TFLOPs of computing power at peak.

- Memory Capacity: Each chip is equipped with 192GB of dedicated RAM.

- High Bandwidth: Each chip's memory bandwidth approaches 7.4 Tbps.
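
To put the per-chip figures in context, the short Python sketch below multiplies them out for the two cluster sizes mentioned earlier. It is a back-of-the-envelope estimate only: it assumes the 4,614 TFLOPs and 192GB figures are per-chip values and that totals scale perfectly linearly with chip count, which real workloads rarely achieve. The function name `cluster_totals` and the constants are illustrative, not part of any Google API.

```python
# Per-chip figures quoted in the feature list (assumed to be per-chip peaks).
PEAK_TFLOPS_PER_CHIP = 4_614
MEMORY_GB_PER_CHIP = 192

# Cluster sizes quoted in the article.
CLUSTER_SIZES = (256, 9_216)


def cluster_totals(num_chips: int) -> dict:
    """Return theoretical aggregate figures, assuming perfect linear scaling."""
    total_tflops = num_chips * PEAK_TFLOPS_PER_CHIP
    total_memory_gb = num_chips * MEMORY_GB_PER_CHIP
    return {
        "chips": num_chips,
        "peak_exaflops": total_tflops / 1_000_000,  # 1 exaFLOP = 10^6 TFLOPs
        "memory_tb": total_memory_gb / 1_000,  # decimal terabytes
    }


for size in CLUSTER_SIZES:
    totals = cluster_totals(size)
    print(
        f"{totals['chips']:>5} chips: "
        f"{totals['peak_exaflops']:.2f} exaFLOPs peak, "
        f"{totals['memory_tb']:.1f} TB memory"
    )
```

Under these assumptions, the 9,216-chip cluster works out to roughly 42.5 exaFLOPs of theoretical peak compute and about 1.77 PB of memory. These are upper bounds rather than measured throughput.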

According to TechCrunch, Google Cloud VP Amin Vahdat stated, “Ironwood is our most powerful, capable, and energy-efficient TPU yet. It’s purpose-built to power thinking, inferential AI models at scale.” The chip is also designed to handle the data-heavy demands of advanced ranking and recommendation workloads, such as algorithms that suggest products based on user preferences.

The Competitive Landscape of AI Accelerators

As demand for AI capabilities grows, competition in the AI accelerator market is intensifying. Nvidia currently holds the leading position, but other tech giants, including Amazon and Microsoft, are developing in-house chips of their own. Amazon offers its Trainium and Inferentia AI accelerators, along with its Graviton CPUs, through AWS, while Microsoft offers Azure instances powered by its Cobalt 100 chip.

Innovative Design for Enhanced Efficiency

One standout feature of the Ironwood TPU is its SparseCore, a specialized core for processing the kinds of data common in ranking and recommendation workloads. Google says the TPU’s architecture is designed to minimize on-chip data movement and latency, which in turn saves power.

Future Integrations with Google Cloud

According to Vahdat, Google plans to integrate Ironwood with its AI Hypercomputer, a modular computing cluster within Google Cloud. He remarked, “Ironwood represents a unique breakthrough in the age of inference, with increased computation power, memory capacity, networking advancements, and reliability.”

As the technology landscape evolves, Ironwood is poised to play a key role in AI inference, which makes it a development worth watching for developers and businesses alike.